Traditionally, atmospheric effects in games have been rendered rather naively. Even today, many titles create fog by simply blending toward a background color with linear falloff. While that works in some cases, the actual behavior of light in participating media (such as fog, dust, water, and any kind of atmosphere) is far from that simple. Light interacts with particles in a complex way. Some of the light travels directly to the observer; some is scattered into the observer’s view through interaction with particles; and some is lost, either absorbed in the medium or backscattered, never reaching the viewer. All of this depends on the wavelength of the light and on the size and type of the particles.
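To make the difference concrete, here is a minimal sketch that puts the classic linear fog blend next to a simple exponential extinction model for a homogeneous medium. The function names, the per-channel coefficients, and the constant in-scattered color are assumptions for illustration, not code from SUPERVERSE.

```python
import math

def linear_fog(scene, fog, dist, start, end):
    """Classic fog: blend the scene color toward a constant fog color
    with linear falloff between the start and end distances."""
    f = min(max((dist - start) / (end - start), 0.0), 1.0)
    return tuple(s * (1.0 - f) + c * f for s, c in zip(scene, fog))

def extinction_fog(scene, inscatter, dist, sigma_t):
    """Homogeneous medium: each channel is attenuated by its own
    transmittance exp(-sigma_t * dist), and the lost energy is replaced
    by an (assumed constant) in-scattered color."""
    out = []
    for s, i, k in zip(scene, inscatter, sigma_t):
        t = math.exp(-k * dist)          # fraction of light that survives
        out.append(s * t + i * (1.0 - t))
    return tuple(out)

# Hypothetical values: blue is attenuated faster than red.
print(linear_fog((1.0, 0.8, 0.6), (0.5, 0.5, 0.5), 50.0, 0.0, 100.0))
print(extinction_fog((1.0, 0.8, 0.6), (0.5, 0.5, 0.5), 50.0, (0.01, 0.02, 0.04)))
```

Giving each color channel its own coefficient is a crude stand-in for the wavelength dependence described above; the linear blend has no equivalent knob.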

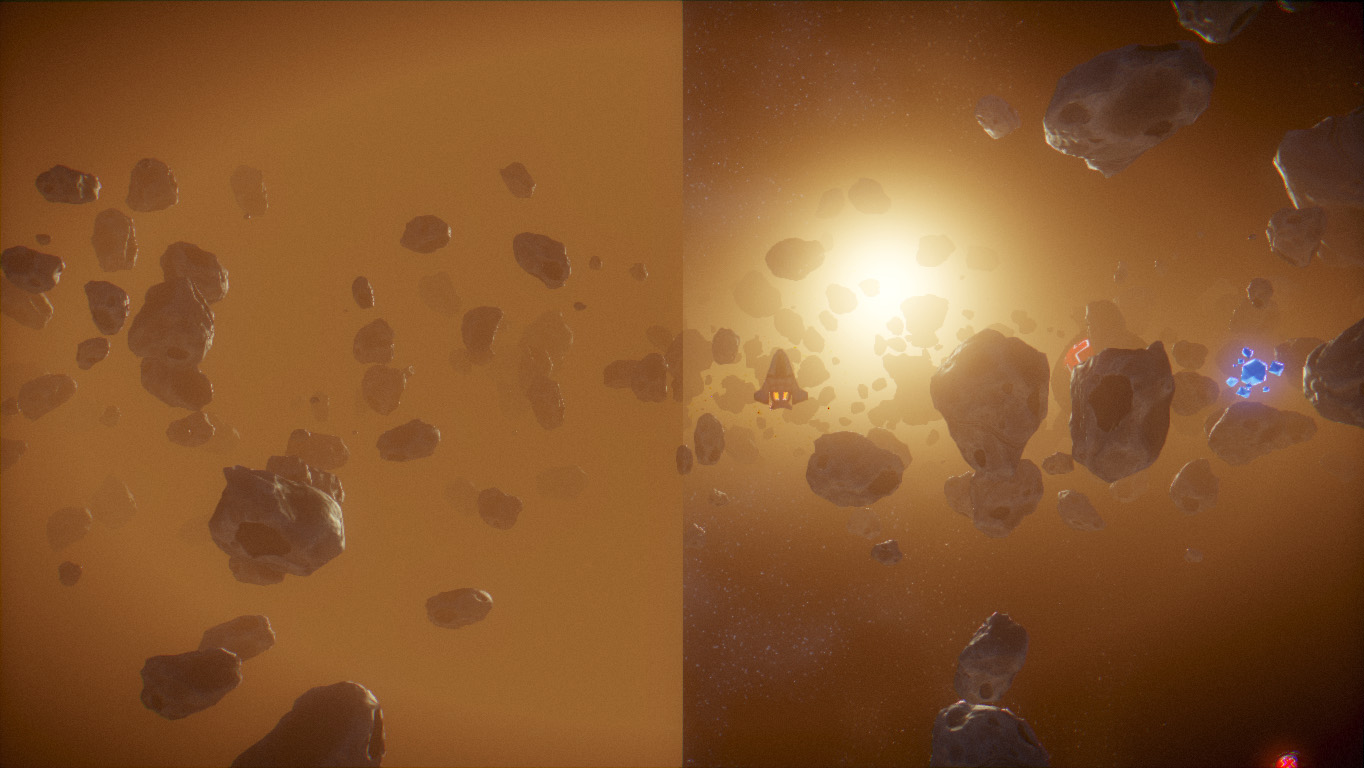

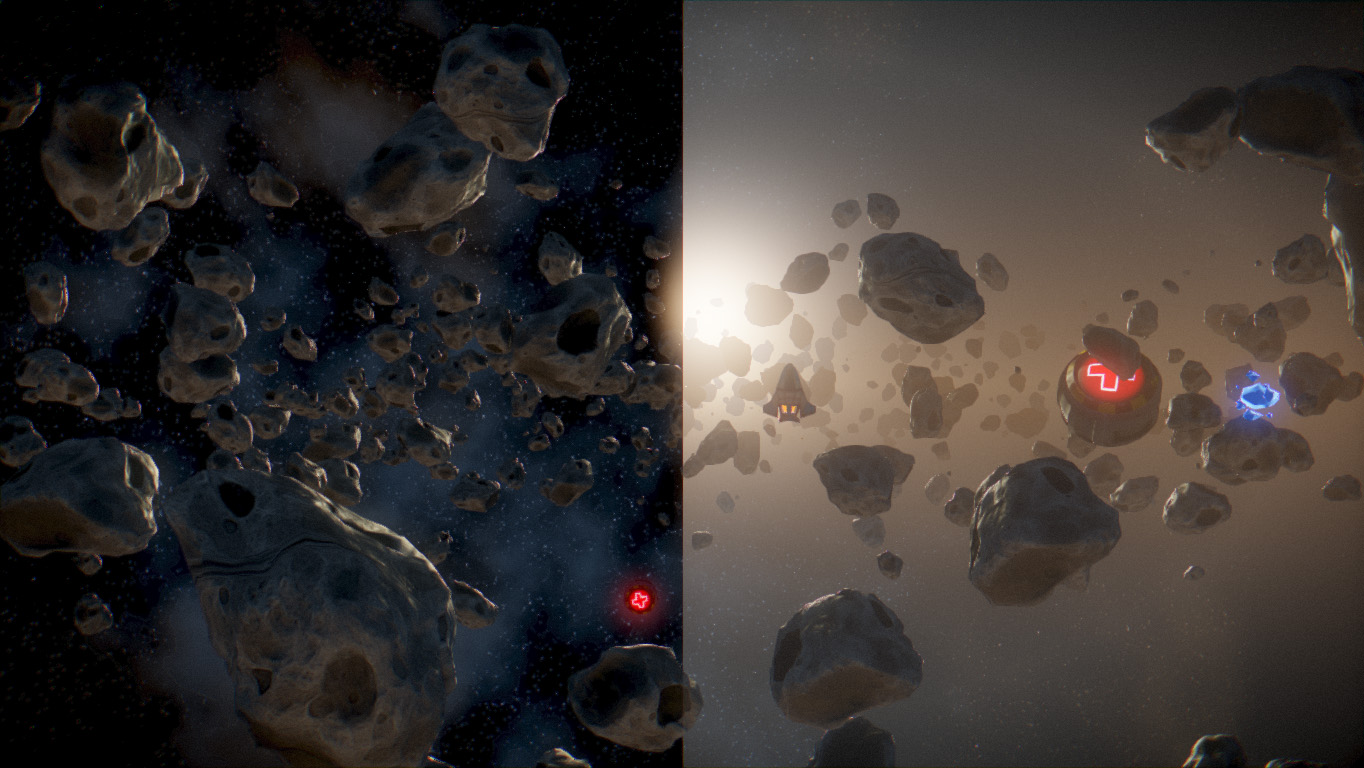

The classic fog model used in games resembles a foggy, overcast day, with the same perceived color for all view directions and observer positions. In SUPERVERSE, most levels are set in a dense asteroid field, with a strong light illuminating the scene from behind for a more dramatic effect, so simple fog wasn’t good enough. In addition, the field may have a vertical density profile close to a Gaussian distribution, with most of the dust and particles concentrated in the inner part and less toward the edges, gradually fading to zero.
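A Gaussian vertical density has the nice property that the amount of dust along a straight view ray can be integrated in closed form with the error function. The sketch below assumes a density of `rho0 * exp(-y^2 / (2 * sigma^2))` centered on the plane `y = 0`; the parameter names and this exact profile are assumptions, since the formula actually used in the game is not given here.

```python
import math

def gaussian_density(y, rho0, sigma):
    """Dust density at height y: peak rho0 at y = 0, fading toward zero."""
    return rho0 * math.exp(-(y * y) / (2.0 * sigma * sigma))

def optical_depth(y0, dir_y, dist, rho0, sigma):
    """Integral of gaussian_density along a ray that starts at height y0,
    has vertical component dir_y (of a unit direction), and length dist."""
    if abs(dir_y) < 1e-6:
        # Ray is (nearly) horizontal: density is constant along it.
        return gaussian_density(y0, rho0, sigma) * dist
    k = sigma * math.sqrt(2.0)
    u0 = y0 / k
    u1 = (y0 + dir_y * dist) / k
    scale = rho0 * sigma * math.sqrt(math.pi / 2.0) / dir_y
    return scale * (math.erf(u1) - math.erf(u0))

def fog_amount(depth):
    """Fraction of light removed along the ray for a given optical depth."""
    return 1.0 - math.exp(-depth)

# Hypothetical ray: starts slightly above the field's center plane, looks upward.
d = optical_depth(y0=1.0, dir_y=0.5, dist=10.0, rho0=0.2, sigma=2.0)
print(d, fog_amount(d))
```

Because the result depends on both the start height and the ray direction, the perceived fog changes with view direction and observer position, unlike the constant-color fog described above.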

Our background consists of a pseudo-randomly generated starfield with several levels of Perlin noise to create color variation and a nebula-like effect. On top of that we lay down distant asteroids and other non-playing objects. Without the dust/fog effect, the scene would look too “flat” and it would be hard for the player to tell the foreground (objects important for gameplay) from the background scenery.

Our atmosphere combines analytic single scattering based on Crytek’s paper (here are some slides: http://developer.amd.com/wordpress/media/2012/10/Wenzel-Real-time_Atmospheric_Effects_in_Games.pdf) with volumetric fog with vertical falloff.

The scattering model doesn’t account for shadows in participating media. Shadowing creates what are commonly known as god rays. A fast way to get a similar effect is to create a mask of the objects in the scattering volume (black asteroids in front of a white space/background) and apply a radial blur centered on the screen projection of the light position. The blur can take several passes until the “light rays” are long enough. The resulting image can then be applied over the rendered scene with, for example, screen blending. The next update to SUPERVERSE will probably include a similar effect.
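The mask-and-blur idea can be sketched on the CPU in a few lines. This is only an illustration of the technique on a tiny grayscale image stored as nested lists; a real implementation would run in a pixel shader, and the function names, sample count, `reach`, and `decay` parameters are all assumptions.

```python
def radial_blur(mask, light_x, light_y, samples=16, reach=0.5, decay=0.95):
    """One radial-blur pass. mask is a list of rows of floats in [0, 1]
    (0 = occluder, 1 = open background). Each pixel takes a weighted average
    of samples along the segment from itself toward the light's screen position."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            total, weight_sum, weight = 0.0, 0.0, 1.0
            for i in range(samples):
                t = reach * i / samples  # fraction of the way toward the light
                sx = min(max(int(round(x + (light_x - x) * t)), 0), w - 1)
                sy = min(max(int(round(y + (light_y - y) * t)), 0), h - 1)
                total += mask[sy][sx] * weight
                weight_sum += weight
                weight *= decay          # samples nearer the light count less
            out[y][x] = total / weight_sum
    return out

def screen_blend(base, overlay):
    """Screen blending of two values in [0, 1]: brightens, never darkens."""
    return 1.0 - (1.0 - base) * (1.0 - overlay)

# White background with a single black "asteroid"; light at the left edge.
mask = [[1.0] * 9 for _ in range(9)]
mask[4][4] = 0.0
rays = radial_blur(mask, light_x=0, light_y=4, reach=1.0)
# Several short passes lengthen the streaks, as described above.
rays = radial_blur(rays, light_x=0, light_y=4, reach=0.5)
```

Pixels on the far side of the occluder from the light end up darker than their neighbors, which is what reads as a shadow streak once the result is screen-blended over the scene.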

More lights can be added, but at the cost of more computation per pixel. Local lights won’t affect the whole scene, so it is a good idea to render them in additional passes on top of each other, using each light’s volume to cut off pixel shader processing (similar to lights in deferred shading). If only in-scattering is simulated, the passes can be combined with simple additive blending. This is similar to the approach used for local fog volumes in Cryengine. Adding a low-frequency light contribution (for example, from the background) would create some variation and increase quality even further.

Non-homogeneous media may be simulated by adding noise to the distance map. However, this may not hold up across different camera angles and observer positions, so some form of raymarching through the volume would be necessary for a realistic result.
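For reference, raymarching in its simplest form just samples the density at regular steps along the view ray and sums it up. The sketch below is a generic illustration, not something SUPERVERSE does; the density function, step count, and `sigma_t` coefficient are hypothetical.

```python
import math

def raymarch_transmittance(origin, direction, dist, density, sigma_t, steps=64):
    """March along a ray through a non-homogeneous medium, accumulating
    optical depth with midpoint samples, and return the transmittance."""
    step = dist / steps
    depth = 0.0
    for i in range(steps):
        t = (i + 0.5) * step  # midpoint of the i-th segment
        p = tuple(o + d * t for o, d in zip(origin, direction))
        depth += density(p) * step
    return math.exp(-sigma_t * depth)

def lumpy_density(p):
    """Hypothetical density: a Gaussian vertical profile modulated by a
    cheap sine-based variation standing in for real 3D noise."""
    x, y, z = p
    vertical = math.exp(-(y * y) / 8.0)
    variation = 1.0 + 0.5 * math.sin(0.7 * x) * math.sin(0.9 * z)
    return vertical * variation

print(raymarch_transmittance((0.0, 1.0, 0.0), (0.6, 0.0, 0.8), 20.0, lumpy_density, 0.1))
```

Because the density is evaluated in world space, the result stays consistent when the camera moves, which is the property that noise on the distance map lacks. The price is one density evaluation per step per pixel.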

When considering the atmosphere in a game, most people think about the actual gameplay and story, and that’s what matters most. But more about that some other time.

Some reading on the subject:

- Crytek’s Real-time atmospheric effects in games

  http://developer.amd.com/wordpress/media/2012/10/Wenzel-Real-time_Atmospheric_Effects_in_Games.pdf

- Better fog

  http://www.iquilezles.org/www/articles/fog/fog.htm

- A practical analytic single scattering model for real-time rendering

  http://www.cs.berkeley.edu/~ravir/papers/singlescat/scattering.pdf